Google’s Gemini 3.1 Pro AI Faces Criticism for Slow Response Times

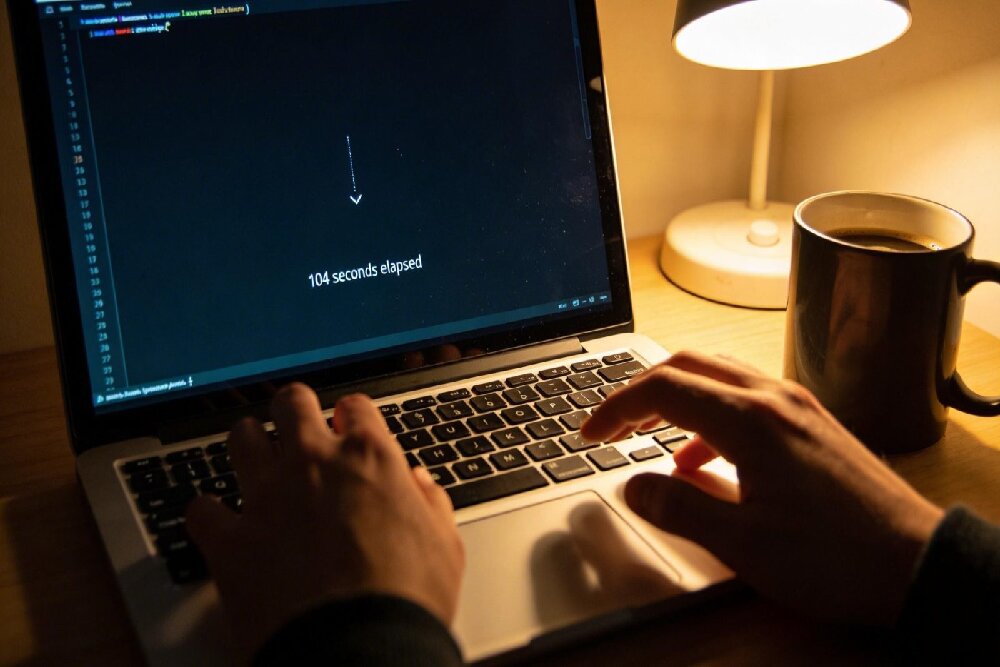

According to the Economic Desk of Webangah News Agency, Google’s new AI model, Gemini 3.1 Pro, has experienced considerable performance issues upon its release, with some users reporting that it took 104 seconds to respond to the word “hello.” This delay has rendered the model impractical for developers who require timely processing.

Gemini 3.1 Pro was launched on February 19th with strong benchmark scores in reasoning, but its actual performance in real-world applications has been significantly hindered by lengthy processing times and an inability to execute basic commands without minutes of delay. While Google has focused on optimizing benchmark performance, the model’s behavior is disrupting production environments.

The issue is notable because benchmark scores have become a significant focus in the AI industry, and Gemini 3.1 Pro’s scores indicated it could compete with leading models from Anthropic and OpenAI. However, early users received a preview version that struggles with code generation and exhibits extremely slow response times, such as taking nearly two minutes for a simple greeting.

Technical specifications for Gemini 3.1 Pro are impressive, featuring a one million token input window, a 64,000 token output capacity, and a score of 77.1% on the ARC-AGI-2 benchmark, placing it ahead of other generative models. Google’s official announcements highlighted the model’s capabilities in solving complex problems and its agentic performance, but they did not address the extensive reasoning time, which makes it unsuitable for interactive development.

Simon Willison, a programmer from the UK, reported the 104-second response time shortly after the model’s release. Online forums are filled with complaints about sluggish performance and timeout error messages. One user posted in a Google AI forum, “Google team! Please roll back this update. This (update) is completely broken. Run the build project. This (problem) will never end and it’s not good for the trust of early users.”
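For readers who want to check a figure like the 104-second greeting themselves, a wall-clock measurement can be sketched as follows. This is a minimal illustration, not the method used in the report: `send_prompt` is a hypothetical stand-in for whatever client call a developer actually makes, `fake_model` is a placeholder that only sleeps, and the 5-second interactivity budget is an assumed threshold rather than a number from the article.

```python
import time
from typing import Callable, Tuple


def time_call(send_prompt: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Return the reply and the wall-clock seconds the call took."""
    start = time.perf_counter()
    reply = send_prompt(prompt)
    elapsed = time.perf_counter() - start
    return reply, elapsed


def is_interactive(elapsed_seconds: float, budget_seconds: float = 5.0) -> bool:
    """True if the response arrived within the (assumed) interactivity budget."""
    return elapsed_seconds <= budget_seconds


def fake_model(prompt: str) -> str:
    """Hypothetical placeholder for a real model client; it just waits briefly."""
    time.sleep(0.05)
    return "hi"


if __name__ == "__main__":
    reply, elapsed = time_call(fake_model, "hello")
    print(f"reply={reply!r} elapsed={elapsed:.2f}s interactive={is_interactive(elapsed)}")
```

Under this assumed budget, a reply that takes 104 seconds would fail the interactivity check by a wide margin.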

User trust is paramount. Early adopters expected a seamless upgrade: a more intelligent successor to Gemini 3 Pro that could handle more complex tasks. Instead, they received a fundamentally different product that requires significant adjustments to existing workflows.

Analysis indicates that Gemini 3.1 Pro is priced competitively, at $2 per one million input tokens and $12 per one million output tokens, approximately half the cost of Anthropic’s latest flagship model. However, this pricing advantage is undermined by the excessive response times.
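To put the quoted rates in concrete terms, the cost of a single request can be estimated as below. This is a rough sketch that assumes the two flat per-million-token prices cited above apply to every request; the function and constant names are illustrative and do not come from any Google SDK.

```python
# Rates as quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 2.0
OUTPUT_USD_PER_MILLION = 12.0


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request, assuming flat per-token pricing."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = input_tokens * INPUT_USD_PER_MILLION + output_tokens * OUTPUT_USD_PER_MILLION
    return total / 1_000_000


if __name__ == "__main__":
    # A request that fills the one million token input window and the
    # 64,000 token output capacity mentioned in the article.
    print(f"${request_cost_usd(1_000_000, 64_000):.3f}")
```

On these assumptions, a request that uses the full input window and the full output capacity would cost roughly $2.77.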

The complaints highlight the true cost: developers are frustrated not just by slow responses but by a loss of trust. They upgraded expecting improved performance and instead received a model that cannot perform basic functions, which points to a product mismatch rather than a mere performance issue.